Natural language processing is one of the hot topics in machine learning. It has applications in many industries around the globe, from media to banking. Data in the form of numbers is easy to interpret when making decisions, but what if it comes as text? That's where NLP plays an important role. One of the most pivotal concepts in the NLP world is sentiment analysis, and it is widely used around the world. For example, sentiment analysis of a finance article can give us an idea of whether or not to invest in a certain firm. The sentiment of a user's tweets can give us an idea of whether to hire him/her for a firm. Let us look at 5 easy ways to create a simple sentiment analysis tool.

The text that we analysed is:

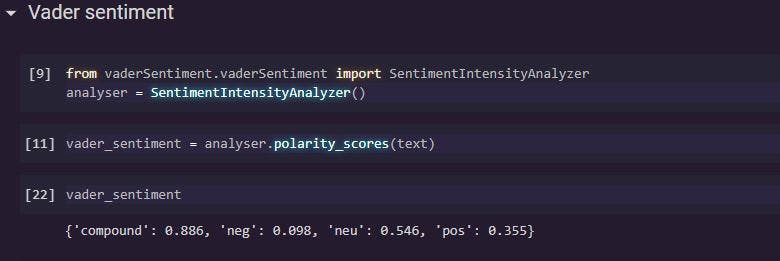

VADER Sentiment

VADER sentiment is an unsupervised, lexicon-based sentiment analysis tool for detecting the sentiment of a text input. It has a dictionary with polarity scores for a list of words. The scores of the words found in the input are combined and normalised to get the polarity score for the input.

The compound score gives the cumulative sentiment value of the text, ranging from -1 (most negative) to +1 (most positive). We can set thresholds on the compound score to classify the sentiment of the article.

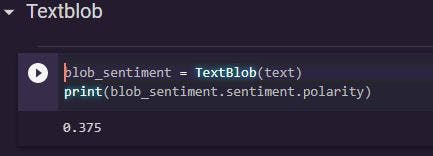
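For readers who want to try the thresholding idea, here is a minimal sketch. It assumes the `vaderSentiment` package is installed, and the cut-off of 0.05 is a commonly used convention rather than something taken from this article. The helper names `label_from_compound` and `vader_sentiment` are made up for illustration.

```python
def label_from_compound(compound, threshold=0.05):
    """Map a VADER compound score in [-1, 1] to a sentiment label.

    The 0.05 threshold is an assumed convention; tune it for your data.
    """
    if compound >= threshold:
        return "positive"
    if compound <= -threshold:
        return "negative"
    return "neutral"


def vader_sentiment(text):
    """Score `text` with VADER and return (label, scores)."""
    # Imported lazily so the thresholding helper works without the package.
    from vaderSentiment.vaderSentiment import SentimentIntensityAnalyzer

    analyzer = SentimentIntensityAnalyzer()
    # polarity_scores returns a dict with 'neg', 'neu', 'pos' and 'compound'.
    scores = analyzer.polarity_scores(text)
    return label_from_compound(scores["compound"]), scores
```

Calling `vader_sentiment(article_text)` on your own string gives back the label together with the raw scores, so you can check how close the compound value sits to the threshold.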

TextBlob

Analysing sentiment using TextBlob also needs no training on our part. We can use either its naive Bayes classifier or its default lexicon-based (bag of words) analyzer to get the sentiment of the input text.

The polarity score, which ranges from -1 to 1, indicates the sentiment of the article: a highly positive score means the sentiment is positive, and a highly negative score means it is negative.

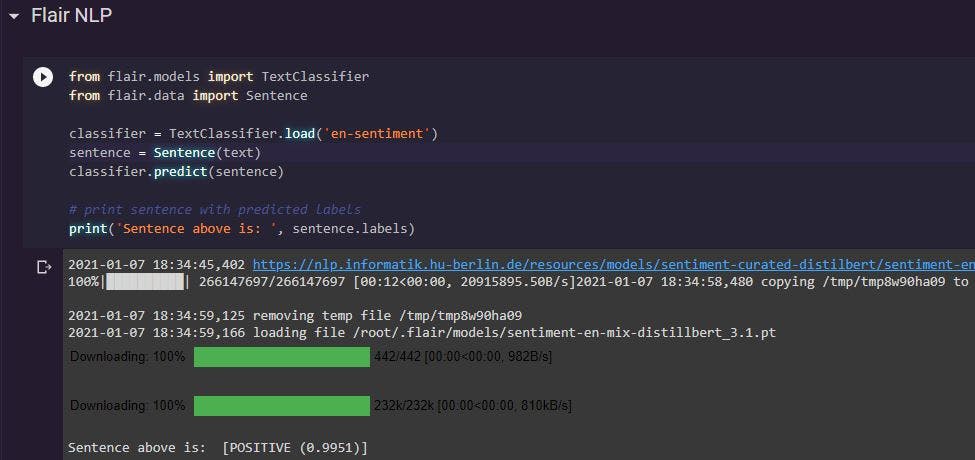
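Both TextBlob options can be sketched in a few lines. This assumes the `textblob` package is installed and, for the naive Bayes path, that its corpora have been downloaded (for example with `python -m textblob.download_corpora`). The `neutral_band` parameter and the function names are assumptions made for this illustration, not part of TextBlob.

```python
def label_from_polarity(polarity, neutral_band=0.1):
    """Map a polarity in [-1, 1] to a label, treating small values as neutral.

    `neutral_band` is an assumed cut-off, not a TextBlob setting.
    """
    if polarity > neutral_band:
        return "positive"
    if polarity < -neutral_band:
        return "negative"
    return "neutral"


def textblob_sentiment(text, use_naive_bayes=False):
    """Return (label, score) for `text` using one of TextBlob's analyzers."""
    # Imported lazily so the labelling helper works without the package.
    from textblob import TextBlob

    if use_naive_bayes:
        from textblob.sentiments import NaiveBayesAnalyzer

        # This analyzer returns a classification ('pos' or 'neg') plus
        # the probabilities p_pos and p_neg.
        result = TextBlob(text, analyzer=NaiveBayesAnalyzer()).sentiment
        label = "positive" if result.classification == "pos" else "negative"
        return label, result.p_pos

    # The default analyzer returns polarity and subjectivity.
    polarity = TextBlob(text).sentiment.polarity
    return label_from_polarity(polarity), polarity
```

The default analyzer is quick and needs no extra downloads, so it is a reasonable first thing to try before switching to the naive Bayes one.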

Sequential models

Sequential models are predominantly used in supervised language processing tasks. Initially, RNNs were used for solving simple tasks on short texts. Then, with the invention of LSTMs, many of the problems with long text sequences were addressed. Flair is a Python NLP library with a lot of implementations of different types of models, so let us use one of its pretrained sequence models to get the sentiment.

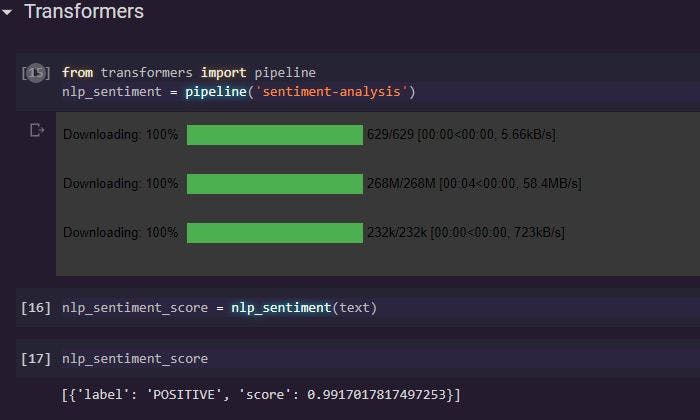
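As a rough sketch of the Flair route, assuming the `flair` package is installed and using its RNN-based `sentiment-fast` model (which is downloaded on first use): this model choice is an assumption and may differ from the one in the screenshot above. The `signed_score` helper is a hypothetical convenience that folds the label and its confidence into one number.

```python
def signed_score(label_value, confidence):
    """Turn a (label, confidence) pair into a single score in [-1, 1]."""
    return confidence if label_value.upper() == "POSITIVE" else -confidence


def flair_sentiment(text, model_name="sentiment-fast"):
    """Classify `text` with a pretrained Flair sentiment model."""
    # Imported lazily so the scoring helper works without the package.
    from flair.data import Sentence
    from flair.models import TextClassifier

    classifier = TextClassifier.load(model_name)
    sentence = Sentence(text)
    # predict() attaches the predicted label to the sentence in place.
    classifier.predict(sentence)
    label = sentence.labels[0]
    return label.value, signed_score(label.value, label.score)
```

Loading the classifier is the slow part, so if you score many texts it is worth loading it once and reusing it instead of calling this function in a loop.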

Transformers

Transformers are the new rulers of the NLP world and have replaced sequential models in many scenarios. A transformer is similar to an encoder-decoder network, but it is built around attention layers that help the model learn efficiently from the relevant context of the text. Below is an example with a pretrained BERT model.

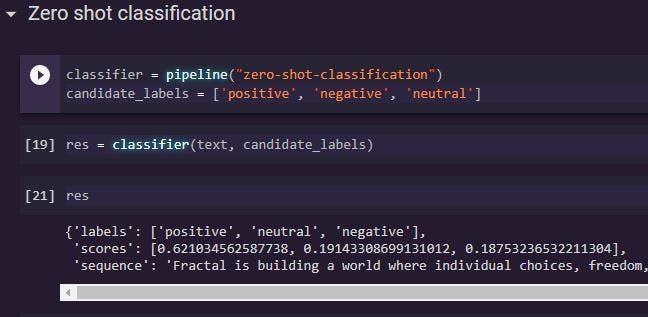
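Here is one way this could look with the Hugging Face `transformers` library. The checkpoint `nlptown/bert-base-multilingual-uncased-sentiment` is an assumption chosen because it is a BERT model fine-tuned for sentiment; it predicts labels from "1 star" to "5 stars", so the hypothetical `stars_to_sentiment` helper maps those onto three classes.

```python
def stars_to_sentiment(label):
    """Map a label such as '4 stars' to negative / neutral / positive."""
    stars = int(label.split()[0])
    if stars <= 2:
        return "negative"
    if stars == 3:
        return "neutral"
    return "positive"


def bert_sentiment(
    text, model_name="nlptown/bert-base-multilingual-uncased-sentiment"
):
    """Classify `text` with a pretrained BERT sentiment checkpoint."""
    # Imported lazily so the mapping helper works without the package.
    from transformers import pipeline

    classifier = pipeline("sentiment-analysis", model=model_name)
    # truncation=True cuts off inputs longer than the model's maximum length,
    # which matters for long articles.
    prediction = classifier(text, truncation=True)[0]
    return stars_to_sentiment(prediction["label"]), prediction["score"]
```

Because of the truncation, only the beginning of a long article is actually scored. Splitting the article into paragraphs and averaging the results is one possible workaround.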

Zero shot learner

Move aside transfer learning, the zero shot learner is here. This is one of the most advanced and recent approaches to some machine learning tasks. The difference between transfer learning and zero shot learning is that the latter does not require a lot of labelled data for the task at hand. Basically, in the case of a text classifier, the model has been trained on a different set of labels from the ones we ask it to predict.
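A minimal sketch of zero shot sentiment classification, assuming the Hugging Face `transformers` library and the `facebook/bart-large-mnli` checkpoint. Both the model and the candidate labels are assumptions picked for illustration. The pipeline returns a dict with `labels` and `scores`, and the hypothetical `top_label` helper picks the best pair.

```python
def top_label(result):
    """Return the (label, score) pair with the highest score."""
    labels, scores = result["labels"], result["scores"]
    best = max(range(len(scores)), key=scores.__getitem__)
    return labels[best], scores[best]


def zero_shot_sentiment(
    text,
    candidate_labels=("positive", "negative", "neutral"),
    model_name="facebook/bart-large-mnli",
):
    """Classify `text` against labels the model was never trained on."""
    # Imported lazily so the helper above works without the package.
    from transformers import pipeline

    classifier = pipeline("zero-shot-classification", model=model_name)
    result = classifier(text, candidate_labels=list(candidate_labels))
    return top_label(result)
```

The nice part is that the labels are just strings: swapping in something like `("bullish", "bearish")` for a finance article needs no retraining, although the quality of the results will depend on how well the model understands those words.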

Thanks for spending your valuable time on this. Keep an eye on this blog for more cool stuff on machine learning.